One of my favorite things about open source projects is the amazing humans behind it. OpenAPS came into existence because of numerous open source efforts; and has continued to evolve in both software and hardware improvements because of ongoing contributions in the open source world.

Some of the contributors and their stories are fairly well known (John Costik’s work to pull data from the CGM originally, which allowed Scott/me to create #DIYPS; Ben West’s work to study pump RF communications and create tools to communicate with the pump, in addition to his work on the building blocks that make up the openaps toolkit). Others have worked on areas that have drastically changed the trajectory of our community’s tools, as well. And two of the individuals who we also owe repeated thanks for facilitating our ability to utilize pocket-sized pancreas are Oskar Pearson and Pete Schwamb.

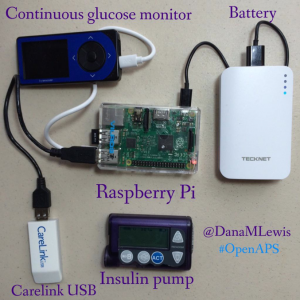

- Oskar wanted to find a way to replace the Carelink stick, which has dismal range. (How dismal? Back in the day, I used to have a Pi on the bedside table connected to a Carelink stick under the mattress, plus another Carelink hanging over the middle of my bed to try to keep me looping all night in case I rolled over.) He ultimately leveraged some of Ben and Pete’s other work and created “mmeowlink“, which enabled other radio sticks (think TI cc1111 stick & other radios using the cc1110 and cc1111 chipsets) to similarly communicate with our loopable pumps. He was also (I think) one of the first users of the Intel Edison for OpenAPS. When he shared his pictures showing the potential down sized rigs, jaws dropped across the Internet. This led to a bunch of new hardware options for OpenAPS rigs; Pi/Carelink was no longer the sole go-to. You could pick Pi/TI; Edison/TI; Pi/Slice of Radio; etc. And the range on these radio sticks is such that a single rig and radio stick can read (in most cases) across room(s). It greatly improved the reliability of real-world looping, and especially was a game changer for those on the go!

- Pete is a wizard in the world of device RF communications. He created the RileyLink (named after his daughter) to bridge communications between a phone and any Sub-1 GHz device (like say, an insulin pump…). But he’s also done some other stellar projects – like subg_rfspy (general purpose firmware for cc111x for sub-GHz RF comms, which is what is leveraged in mmeowlink); and also ccprog (which enables you to flash the cc1110 radio chip on an Explorer board (see below) without having any separate equipment). And, as someone who has been building boards and decoding RF stuff for years..he’s also incredibly generous with sharing his knowledge with other people building open source hardware boards, including with those of us who collaborated on the Explorer Board.

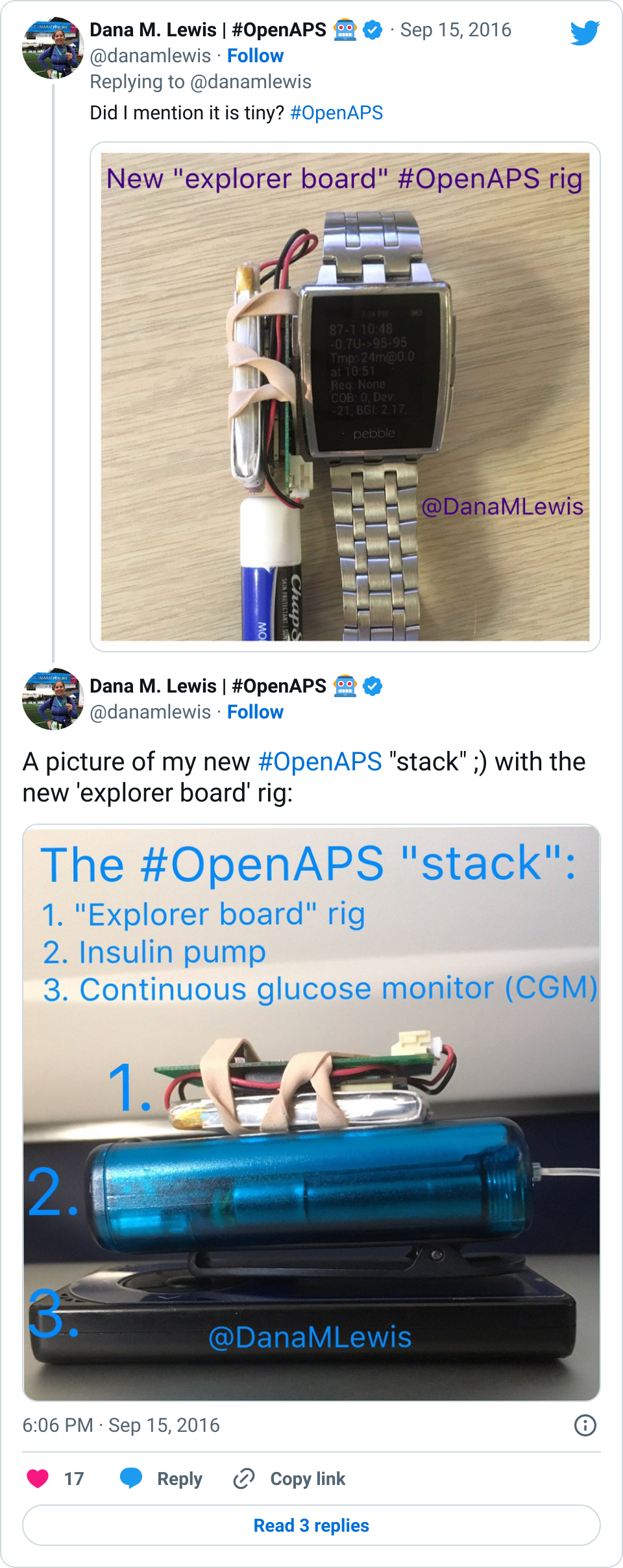

In addition, there have been other people outside the OpenAPS community who have been touched by our stories or by diabetes in their families and have also stepped up to contribute to open source projects. This is how the Explorer Board came into existence. Someone from Intel had stumbled across OpenAPS on Twitter and reached out to meet up with Scott and me when he was in Seattle; he invited a hardware board designer he knew (Morgan Redfield) to stop by the meetup and offered to help initiate development of a smaller board. And amazingly, that’s exactly what happened. Morgan collaborated with us & others like Pete to design, build, and iterate on a small, open source hardware board (the “900 Mhz Explorer Board“) for the Edison that had a built in cc1110 radio, further allowing us to reduce the size of our rigs.

Eventually others at Intel heard about this collaboration, and we (OpenAPS and Morgan) were nominated for the Edison Foundation’s 2017 Edison Innovation Award for “Best Use of the Intel Edison Module”.

I was blown away to find out tonight that we are honored to actually receive the award on behalf of everyone who made these projects possible. I’m incredibly proud of this community and the dozens of people who have contributed in so many ways to a) make DIY artificial pancreases a thing and b) make it more feasible for hundreds of people to be DIYing these themselves with open source software and hardware. (And, this is very much in line with Thomas Edison’s work – the Edison Foundation spoke tonight about how Edison really created the group collaboration and innovation process!)

Big thanks to Intel and the Edison Foundation for highlighting the community’s efforts…and endless hugs and ongoing appreciation for everyone who has contributed to OpenAPS and other #WeAreNotWaiting projects!

. So this post explains some of the

. So this post explains some of the

Recent Comments