I’ve written about Open Humans before; both in terms of how we’re creating Data Commons there for people using Nightscout and DIY closed loops like OpenAPS to donate data for research, as well as building tools to help other researchers on the Open Humans platform. Madeleine Ball asked me to share some more about the background of the community’s work and interactions with Open Humans, along with how it will play into the Opening Pathways grant work, so here it is! This is also posted on the OpenHumans blog. Thanks, Madeleine, and Open Humans!

—

So, what do you like about Open Humans?

Health data is important to individuals, including myself, and I think it’s important that we as a society find ways to allow individuals to be able to chose when and how we share our data. Open Humans makes that very easy, and I love being able to work with the Open Humans team to create tools like the Nightscout Data Transfer uploader tool that further anonymizes data uploads. As an individual, this makes it easy to upload my own diabetes data (continuous glucose monitoring data, insulin dosing data, food info, and other data) and share it with projects that I trust. As a researcher, and as a partner to other researchers, it makes it easy to build Data Commons projects on Open Humans to leverage data from the DIY artificial pancreas community to further healthcare research overall.

Wait, “artificial pancreas”? What’s that?

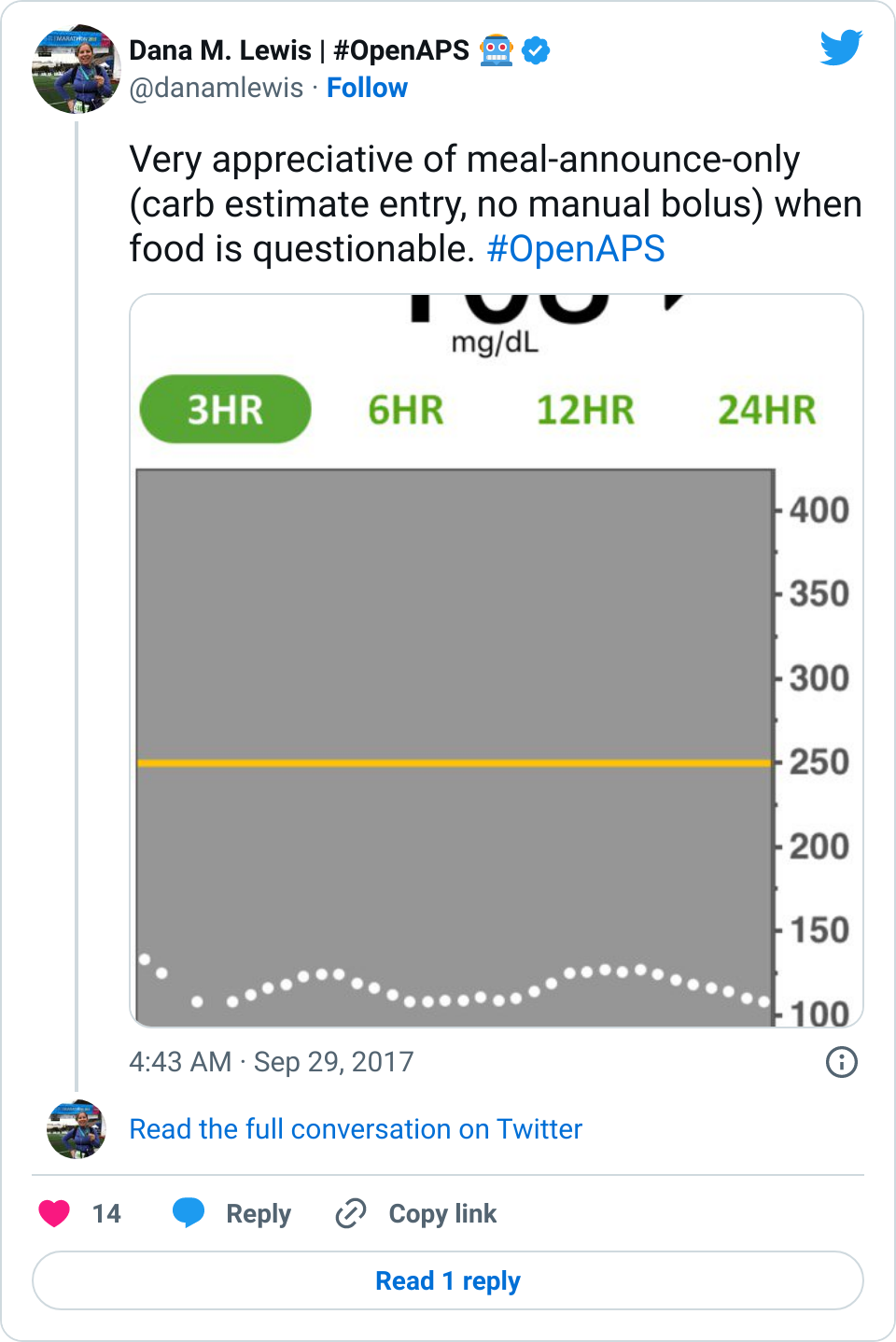

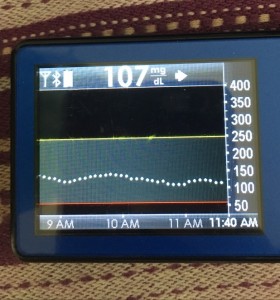

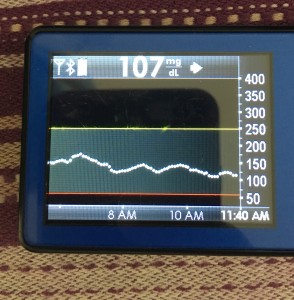

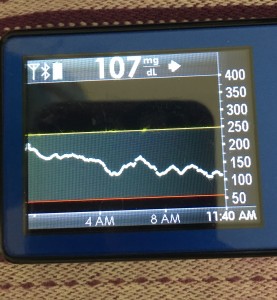

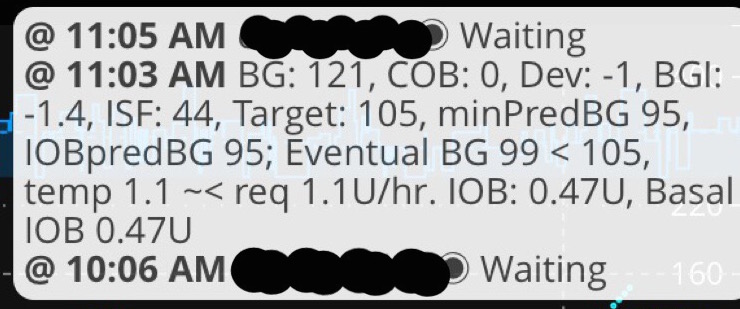

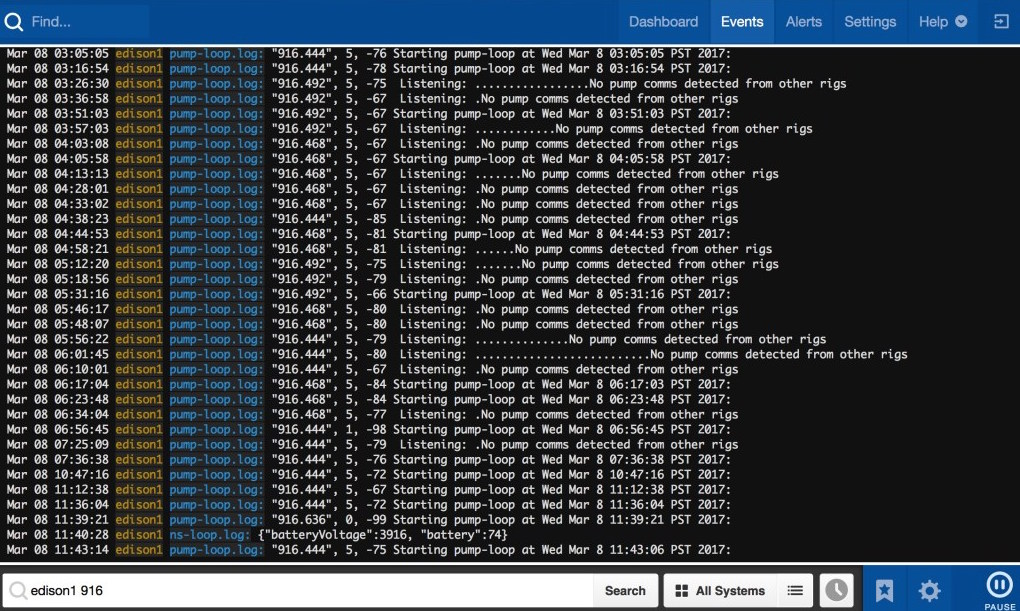

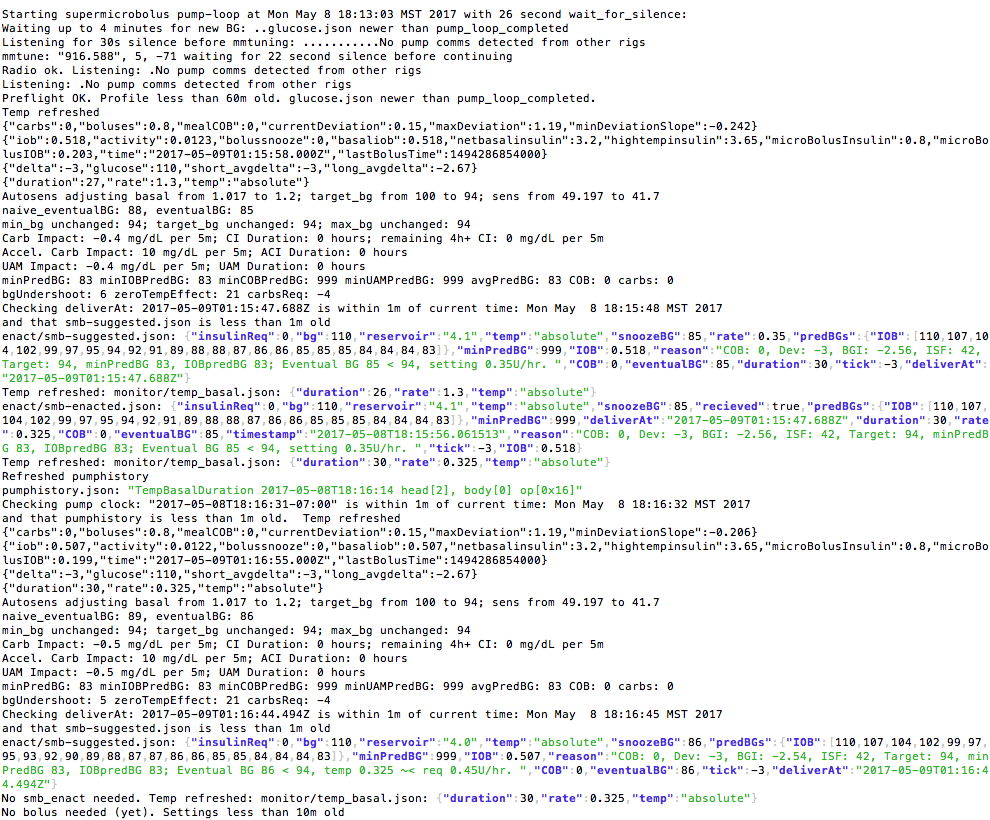

I helped build a DIY “artificial pancreas” that is really an “automated insulin delivery system”. That means a small computer & radio device that can get data from an insulin pump & continuous glucose monitor, process the data and decide what needs to be done, and send commands to adjust the insulin dosing that the insulin pump is doing. Read, write, read, rinse, repeat!

I got into this because, as a patient, I rely on my medical equipment. I want my equipment to be better, for me and everyone else. Medical equipment often isn’t perfect. “One size fits all” really doesn’t fit all. In 2013, I built a smarter alarm system for my continuous glucose monitor to make louder alarms. In 2014, with the partnership of others like Ben West who is also a passionate advocate for understanding medical devices, I “closed the loop” and built a hybrid closed loop artificial pancreas system for myself. In early 2015, we open sourced it, launching the OpenAPS movement to make this kind of technology more broadly accessible to those who wanted it.

You must be the only one who’s doing something like this

Actually, no. There are more than 400+ people worldwide using various types of DIY closed loop systems – and that’s a low estimate! It’s neat to live during a time when off the shelf hardware, existing medical devices, and open source software can be paired to improve our lives. There’s also half a dozen (or more) other DIY solutions in the diabetes community, and likely other examples (think 3D-printing prosthetics, etc.) in other types of communities, too. And there should be even more than there are – which is what I’m hoping to work on.

So what exactly is your project that’s being funded?

I created the OpenAPS Data Commons to address a few issues. First, to stop researchers from emailing and asking me for my individual data. I by no means represent all other DIY closed loopers or people with diabetes! Second, the Data Commons approach allows people to donate their data anonymously to research; since it’s anonymized, it is often IRB-exempt. It also makes this data available to people (patient researchers) who aren’t affiliated with an organization and don’t need IRB approval or anything fancy, and just need data to test new algorithm features or investigate theories.

But, not everyone implicitly knows how to do research. Many people learn research skills, but not everyone has the wherewithal and time to do so. Or maybe they don’t want to become a data science expert! For a variety of reasons, that’s why we decided to create an on-call data science and research team, that can provide support around forming research questions and working through the process of scientific discovery, as well as provide data science resources to expedite the research process. This portion of the project does focus on the diabetes community, since we have multiple Data Commons and communities of people donating data for research, as well as dozens of citizen scientists and researchers already in action (with more interested in getting involved).

What else does Open Humans have to do with it?

Since I’ve been administering the Nightscout and OpenAPS Data Commons, I’ve spent a lot of time on the Open Humans site as both a “participant” of research donating my data, as well as a “researcher” who is pulling down and using data for research (and working to get it to other researchers). I’ve been able to work closely with Madeleine and suggest the addition of a few features to make it easier to use for research and downloading large data sets from projects. I’ve also been documenting some tools I’ve created (like a complex json to csv converter; scripts to pull data from multiple OH download files and into a single file for analysis; plus writing up more details about how to work with data files coming from Nightscout into OH), also with the goal of facilitating more researchers to be able to dive in and do research without needing specific tool or technical experience.

It’s also great to work with a platform like Open Humans that allows us to share data or use data for multiple projects simultaneously. There’s no burdensome data collection or study procedures for individuals to be able to contribute to numerous research projects where their data is useful. People consent to share their data with the commons, fill out an optional survey (which will save them from having to repeat basic demographic-type information that every research project is interested in), and are done!

Are you *only* working with the diabetes community?

Not at all. The first part of our project does focus on learning best practices and lessons learned from the DIY diabetes communities, but with an eye toward creating open source toolkit and materials that will be of use to many other patient health communities. My goal is to help as many other patient health communities spark similar #WeAreNotWaiting projects in the areas that are of most use to them, based on their needs.

How can I find out more about this work?

Make sure to read our project announcement blog post if you haven’t already – it’s got some calls to action for people with diabetes; people interested in leading projects in other health communities; as well as other researchers interested in collaborating! Also, follow me on Twitter, for more posts about this work in progress!

Recent Comments