When you’re dealing with a challenging health situation, it can be hard. Hard because of what you are dealing with, and hard because you need to navigate seeking and getting care. That typically looks like going to a doctor, getting the doctor to understand the problem, and then finding solutions to deal with the problem.

Each of those has its own challenges. You may not have a doctor who specializes in the area you need. For example, you may not have a primary care doctor when you have strep throat, and have to go to urgent care instead. Or maybe you develop a problem with your lungs and need a pulmonologist, but that requires a referral from someone and several months’ wait to be seen.

Once you face that challenge and are in fact seen by the specializing provider (and hopefully your problem is in fact one this specialist can address, rather than one that gets you referred on to a different kind of specialist), you have to figure out how to communicate and show your doctor what issues you have. In some cases, it’s really obvious. You have a red, angry throat, which leads the doctor to order a strep test. Or you go to the dermatologist for a skin check because you have a mole that is changing, and you get a skin check and a biopsy of the mole. Problem identified and confirmed.

It takes identifying and confirming the problem, and usually diagnosing it, to then reach the stage of addressing it, either with symptom management or with curing or fixing or eliminating the source.

But…what happens when you and your doctor can’t define the problem: there is no diagnosis?

That’s a challenging place to be. Not only because you have a problem and are suffering with it, but also because the path forward is uncertain. No diagnosis often means no treatment plan, or the treatment plan itself is uncertain or delayed.

No diagnosis means that even if your provider prescribes a treatment option, it may get denied by the insurance company because you don’t have the clinical diagnosis the treatment is approved for. Maybe your doctor is able to successfully appeal and get approval for off-label use, or maybe not.

And then, there’s no certainty that the treatment will work.

So. Much. Uncertainty. It’s hard.

It’s also made hard by the fact that it’s hard to tell people what’s going on. A broken leg, or strep throat, or a suspicious mole: with these, it is relatively easy to explain to other people what is going on, what it means, how it might be treated, and roughly how long it should take to resolve.

Most stories are like this. There’s a story arc, a narrative that has a beginning, middle, and an end.

With the uncertain health situations I’m describing, it’s often unclear whether you’ve even reached “the middle”, or what the end will be…or if there even is an end. There is certainly no guarantee of a happy ending, or of an ending at all if you have been diagnosed with a chronic, lifelong disease.

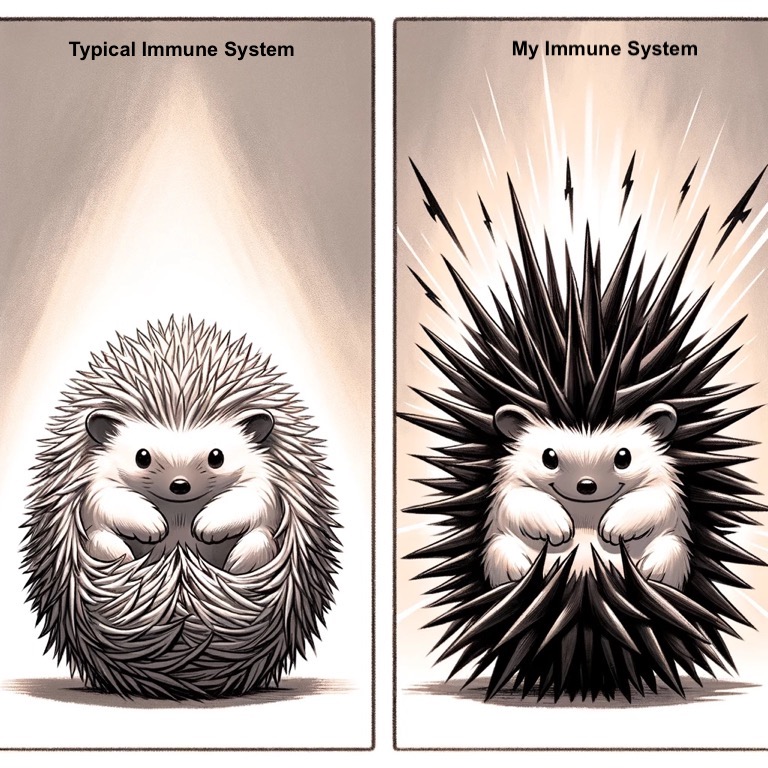

I’ve been there (here) many times, now living with more than a handful of autoimmune diseases that I’ll have for life. But the first few were relatively “simple” to diagnose, treat, and understand what they looked like. For example, with type 1 diabetes, the symptoms of weight loss, excessive urination, incredible thirst, etc. led to a blood test confirming high glucose, an A1c test confirming it had been high for months, and a diagnosis of type 1 diabetes. The treatment is managing glucose levels with insulin therapy, presumably for the rest of my life. (22 years and counting, here.) It makes things challenging, but for the most part I can explain to other people what it means and how it does or does not impact my life.

Lately, though, I’ve found more uncertainty. And that makes it hard, because if there is no diagnosis then there is no clear explanation. No certainty, for myself or to give my loved ones. Which makes it feel isolating and hard psychologically, in addition to the physical ramifications of the symptoms themselves.

There’s a saying in medicine: “When you hear hoofbeats, think horses, not zebras.” It’s a reminder for clinicians to consider common explanations first, rather than go straight to explanations of rare conditions. Most of the time, the advice is helpful—common issues should be ruled out before rarer ones.

In my case, that’s what we did. We ruled out every possible common condition…and then pretty much all the rare ones. So what do you do, when your symptoms don’t match the pattern of a horse…or a zebra?

You might have an uncommon presentation of a common disease or a common presentation of a rare disease.

Either way, whether horse or zebra, the symptoms cast a shadow. They’re real.

Whether the animal in question has stripes or not, you’re still living with the impact. What makes this even harder is that many diagnostic processes rely on pattern recognition, yet undiagnosed conditions often defy easy patterns. If your symptoms overlap with multiple conditions—or present in a way that isn’t fully typical—then the search for answers can feel like trying to describe a shadow, not the thing itself.

And shadows are difficult to explain.

This creates a meta-challenge on top of the challenge of the situation itself: trying to explain the unexplainable. This is crucial not just for helping your doctors understand what is going on, so we can improve the diagnostic pursuit of answers or gauge the efficacy of hand-wavy treatment plans meant to do something, anything, to help… but also for explaining to your friends and family what is going on, and what they can do to help.

We often want to see or hear health stories in the format of:

- Here’s the problem.

- Here’s what I’m doing about it.

- Here’s how I’m coping or improving.

- Bonus: here’s how you can help.

I’ve seen so many examples of friends and family responding to the call for help, for me and for others in health situations. I know the power of this, which is why it is so challenging to ask for help when you can’t explain what’s going on. It’s hard to explain the “what” and the “why”: you are only left with the “so what” of ‘here’s what the end result is and how I need help’.

(And if you’re like me, a further challenge is that the situation is dynamic, constantly changing and progressing, so the help you might need is constantly evolving.)

You might also feel like you shouldn’t ask for help, because you can’t explain the what and the why. Or, because it is ongoing and unclear, you may want to ‘reserve’ asking for help for later, ‘when you really need it’, even if you truly do need help right then. As weeks, months, or even years drag on, it can be hard not to feel like you are burdening your loved ones and friends.

But you’re not.

The best meta explanation and response to my attempts to communicate the challenges of the meta-challenge of the unexplainable, the uncertainty, the unending saga of figuring out what was going on and how to solve it, came from Scott (my husband). We’ve been married for 10 years (in August), and he met me when I had two of my now many autoimmune diseases. He knew a bit of what he was getting into, because our relationship evolved and progressed alongside our joint interests in problem solving and making the world better, first for me and then for anyone who wanted open source automated insulin delivery systems (aka, we built OpenAPS together and have spent over a decade together working on similar projects).

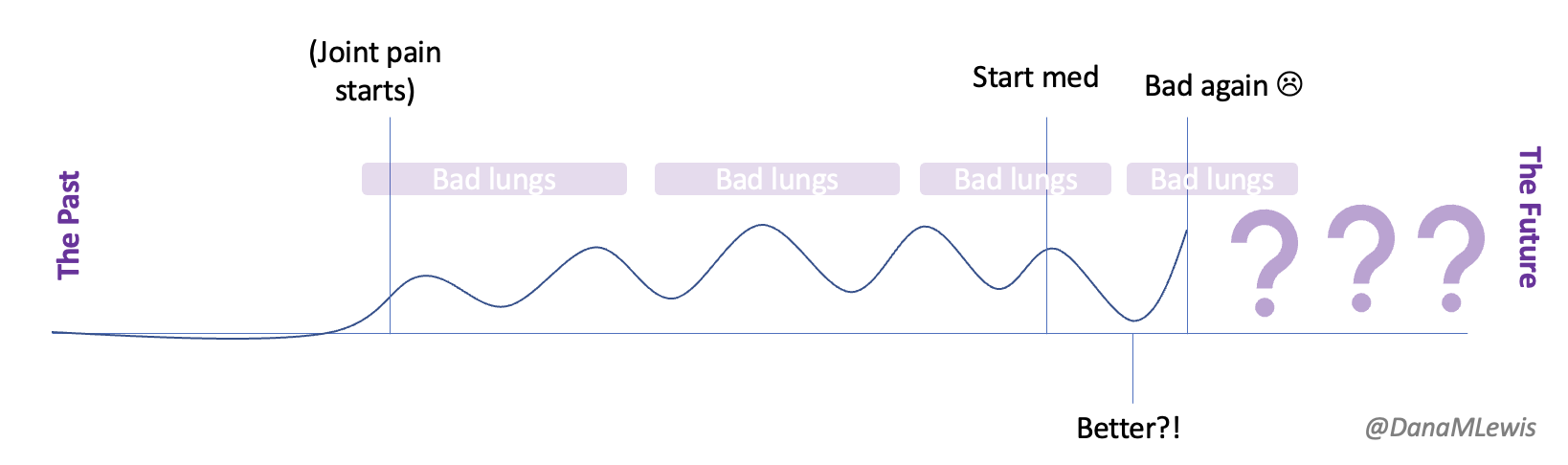

That being said, to me it has felt drastically different to be living with ‘understandable’ chronic autoimmune diseases like type 1 diabetes and celiac, compared to this latest saga, where it’s unclear whether it’s an extension of a known autoimmune disease (presenting and progressing atypically) or a new, rare autoimmune or other type of disease. So much is unknown. So many challenges. Whenever we adapted to and addressed one problem or challenge, it evolved and needed another solution, or another problem cropped up. I’m honestly exhausted by adaptations and problem solving at this point. I’m tired of asking, seemingly endlessly, for help and support.

Amazingly, Scott does not seem exhausted by it or tired of me, whereas many people would be. And a few weeks ago he said something, completely off the cuff and unplanned, that really resonated with me. I was talking, again (he’d heard this many times), about how hard this all has been and is, and saying that I was also aware of the effect it has on him and on our relationship. I can’t do all the things I did before, or in the way I did before, so it’s changed some of what we do, where we go, and how we are living our lives. I’m having a hard time with that, and it would be natural for him to have similar feelings. (And frustrations, because if I feel frustrated with being out of control and unable to change the situation and fix it, so would he, perhaps even worse secondhand, because it’s so hard to love someone and not be able to help them!)

But what he said literally stopped me in my tracks: we were out walking, and I had to physically stop to process it in my heart (and leak some tears from my eyes).

It was something along the lines of:

“You’re not an anchor, you’re a sail.”

Meaning, to him, I’m not holding him back from living his life (as I was and am concerned about).

He continued by saying:

“Yes, the sail is a little cattywampus sometimes, but you’re still a sail that catches wind and takes us places. It’s much more interesting to let you sail us, even in a different direction, than to be without a sail.”

(Yes, you can pause and tear up, I do again just thinking about how meaningful that was.)

What a hit, in the most wonderful way, to my heart, to hear that he doesn’t see me and all these challenges as an anchor. He recognizes them, and that we are dealing with them, but he’s willing and wanting us to sail in the direction they take us, even when that makes us go in some unplanned directions.

Probably some of this is personality differences: I love to plan. I love spreadsheets. I love setting big goals and making spreadsheets of processes and how I’ll achieve them. In the current situation, I can’t make (many) plans, there are no spreadsheets or processes or certainty or clear paths forward. We’re in an ocean of uncertainty, with infinite paths ahead, and even if I set sail in a certain direction…I’m a cattywampus sail that may result in a slightly different direction.

But.

Knowing I’m a cattywampus sail, and not an anchor, has made all the difference.

If you’re reading this and dealing with an uncertain health situation (undiagnosed, or diagnosed but untreated, or diagnosed but with no certainty of what the future looks like), you may feel like you’re a boat adrift in the middle of an ocean. No land in sight. No idea which way the wind will blow you.

But.

You’re a boat with a sail. Maybe a cattywampus one, and maybe you’re going to sail differently than everyone else, but you are probably still going to sail. Somewhere. And your family and friends love you and will be happy to go whichever direction the wind and the cattywampus sail take you.

If you’re reading this and you’re the friend or family of a loved one dealing with an uncertain situation, first, thank you. Because you clearly love and support them, even through the uncertainty. That means the world.

You may not know how to help, or be able to help when they need it, but communicating your love and support alone can be incredibly meaningful and impactful. If you want, tell them they’re a sail and not an anchor. It may not resonate with them the way it resonated with me, but if you can, find a way to tell them that they and their needs are not a burden, that life is more interesting with them, and that you love them.

This has become a long post, with no clear messages or resolutions, which in and of itself is an example of these types of situations: hard, uncertain, messy, with no clear ending or answer or sense of what comes next. But these types of situations happen a lot, more than you know.


If you’re going through this, just know you’re not alone, you’re loved and appreciated, and you’re a sail rather than an anchor, whether you’re a zebra or a horse or a zebra-colored horse or a horse-shaped zebra shadow.

—

PS – I’ll also share one specific thing, for loved ones and friends, as something that you can do if you find out about a situation like this.

If someone trusts you and communicates part or all of their situation, and they specifically tell you in confidence that they are not sharing it publicly, or with anyone else, or with X person or Y group of people…honor that trust and their request not to share that information. They have a reason, if not multiple reasons, for asking. When dealing with uncertain health situations, we can control so little. What we can control, we often want to, such as choosing when, how, and with whom to communicate about our challenges and situations.

If someone honors you by telling you what’s going on and asks you not to tell other people, honor that by not abusing the trust in your relationship. Yes, it can be hard to keep it to yourself, but it’s likely about 1% as hard as what they are dealing with. Passing on the word becomes a game of telephone that garbles what is going on, often turns out to be passed on incorrectly, and causes challenges down the line…not least because it can harm your relationship with them if they perceive that you have violated their trust by passing on information they explicitly asked you not to share. That, on top of everything else, can make a challenging situation even harder, and it may later influence how they want to communicate with others, potentially shutting down other avenues of support for them. So please, respect the wishes of the person, even if it’s hard for you. You can always ask “can I share this with so and so?”, but respect it if the answer is no, even if you would do something different in their situation. Because, after all, it is not your situation. You’ve been invited on the boat, but you are not the sail.

As an example for how I like to disseminate my articles personally, every time a journal article is published and I have access to it, I updated


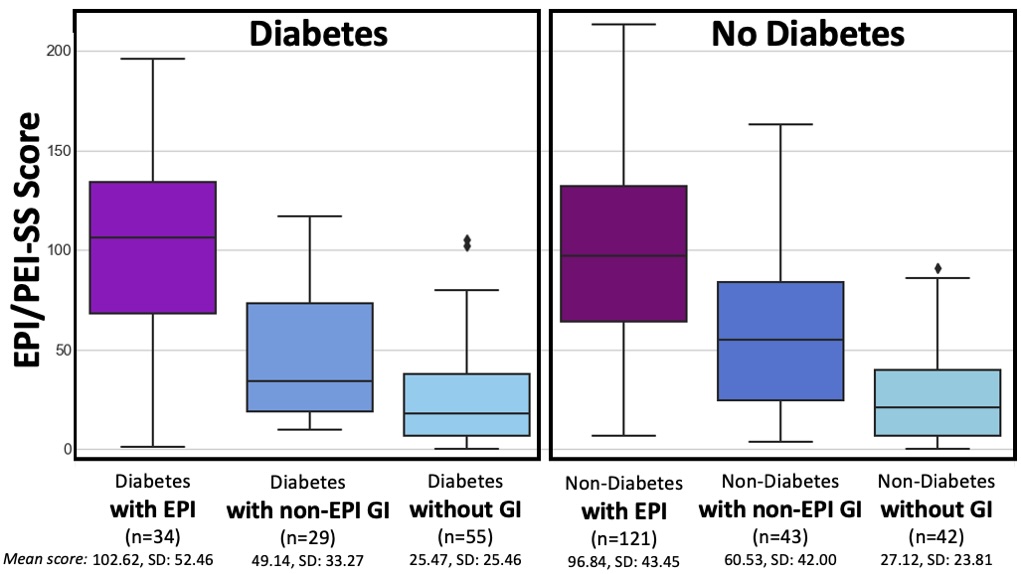

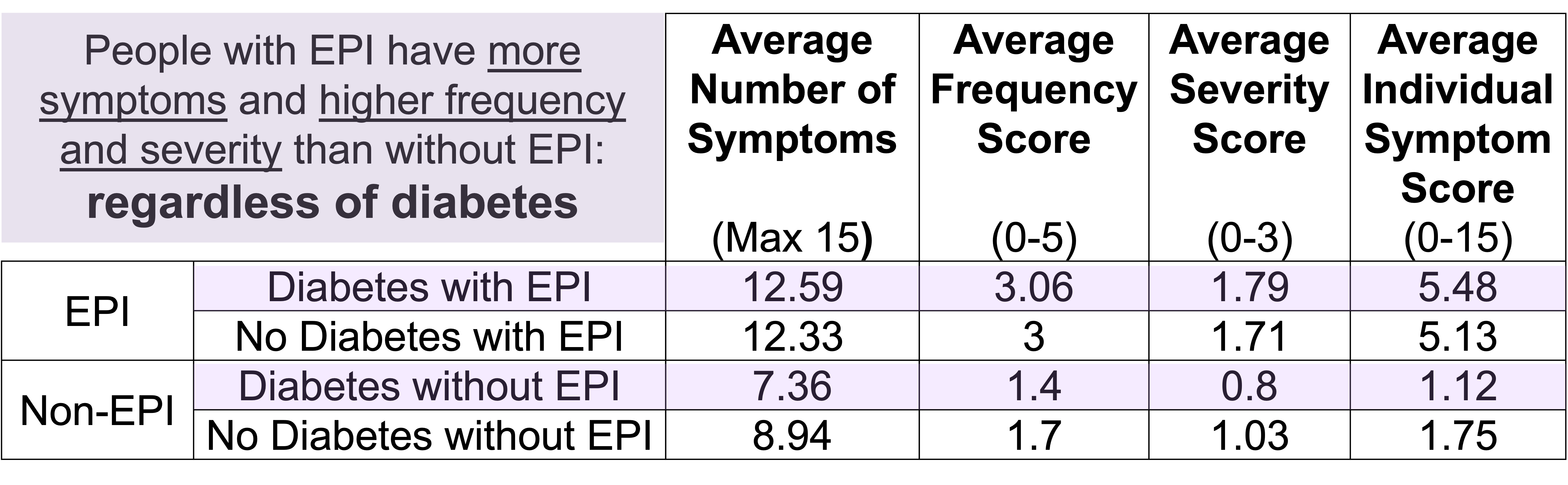

How do you have people take the EPI/PEI-SS? You can pull this link up (

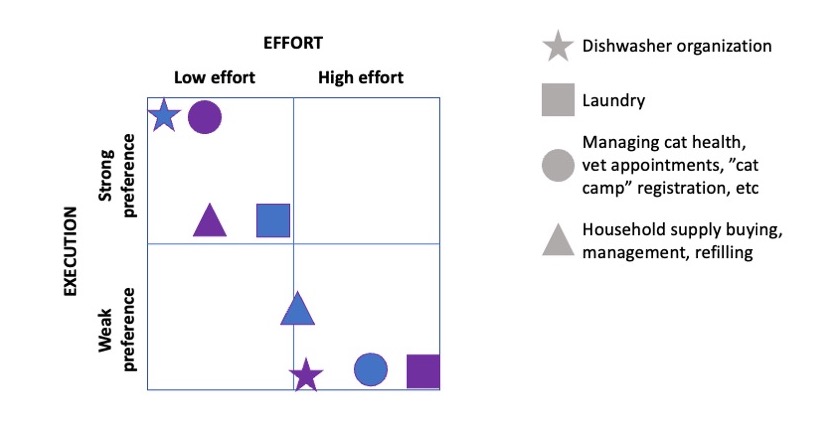


This came to mind because he went on a work trip, and I stuck things in the dishwasher for 2 days, and jokingly texted him to “come home and do the dishes that the raccoon left”. He came home well after dinner that night, and the next day texted when he opened the dishwasher for the first time that he “opened the raccoon cage for the first time”. (LOL).
