What do you see when you see (or think of) diabetes?

—

In my house, I see small piles of low treatments (for hypoglycemia) in every place that I hang out. On my desk next to my computer. In my bedside table. On the counter next to the door where I grab them before heading out for a run or a walk. On the edge of the bathtub in my shower, because low blood sugars happen everywhere.

Sometimes, one of my nephews spots them in a translucent pocket on my shorts. His brain sees candy at first, not a medical treatment. Which is fine – he’s young. He’s learning that for Aunt Dana, they’re not “candy” or a “treat” – they’re a medical treatment.

All of the nieces and nephews have learned or are learning that Aunt Dana has “robot parts”, which is how they see my pump clipped to my pocket or waistband or the hard lump (CGM sensor) they feel or see on my arm.

—

What I hope people see, though, is that diabetes is not a death sentence. Thanks to improvements in insulin, insulin delivery, and blood glucose measuring, it’s no longer visibly tied to possible complications of diabetes, like amputations, kidney dialysis, or loss of vision. Those complications are what I saw, and what was presented to me, when I was diagnosed with diabetes in 2002.

I hope instead that people see people with diabetes like me living our lives, running 82-mile ultramarathons (for those of us who wish to do that), experiencing pregnancy (for those who wish to do that), achieving our career goals, and living in whatever ways we want. Just like everyone else.

—

It’s worth noting that when typing this, autocorrect in my first sentence suggested “treat” instead of “treatment”.

That’s how computers “see” diabetes, too, with sugar and carbs treated as equivalent to diabetes. Despite the fact that medical research shows that diabetes is a complicated combination of genetics, immune system shenanigans (my words), and numerous other factors not in a person’s control, humans haven’t gotten that message. People are still stigmatized and joked about.

So computers learn that. And that’s what they see.

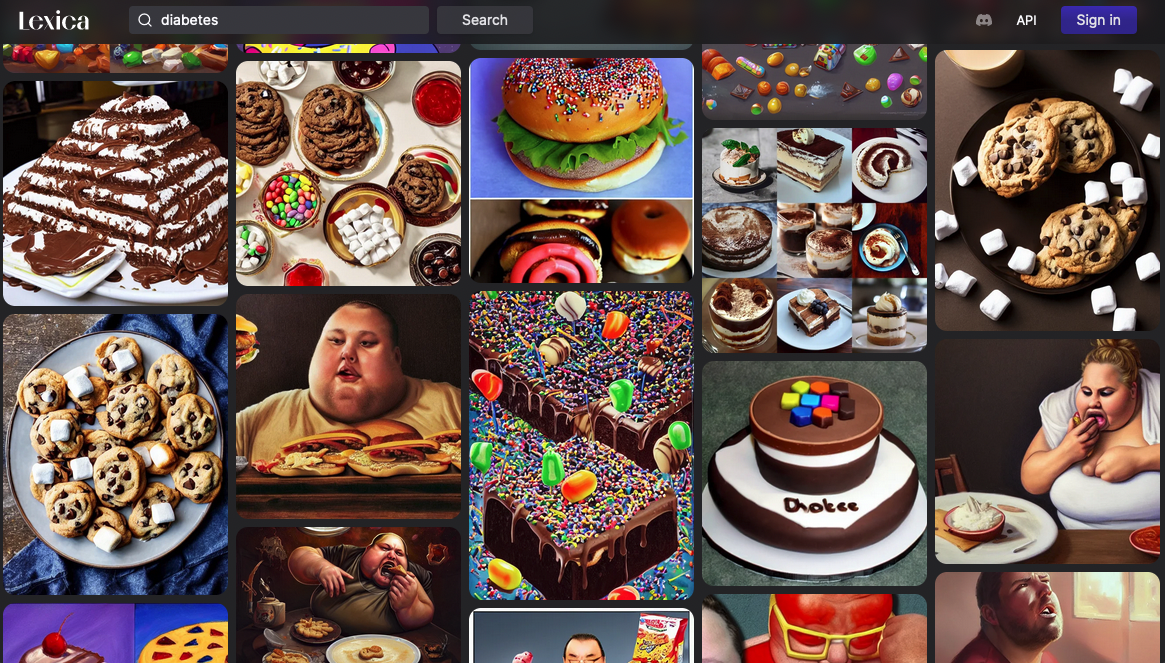

When I was testing Stable Diffusion (an open-source AI tool for generating images) recently, I learned about a site called “Lexica” that shows you what other people have generated with similar keywords. I thought it would be interesting to get ideas for better images to visualize concepts in posts about diabetes, so I searched for “diabetes”.

I should’ve known better. Humans say and think “diabetes” in response to seeing pictures of carbohydrates, so that’s what computers learn.

AI doesn’t know any better because humans haven’t taught themselves any better.

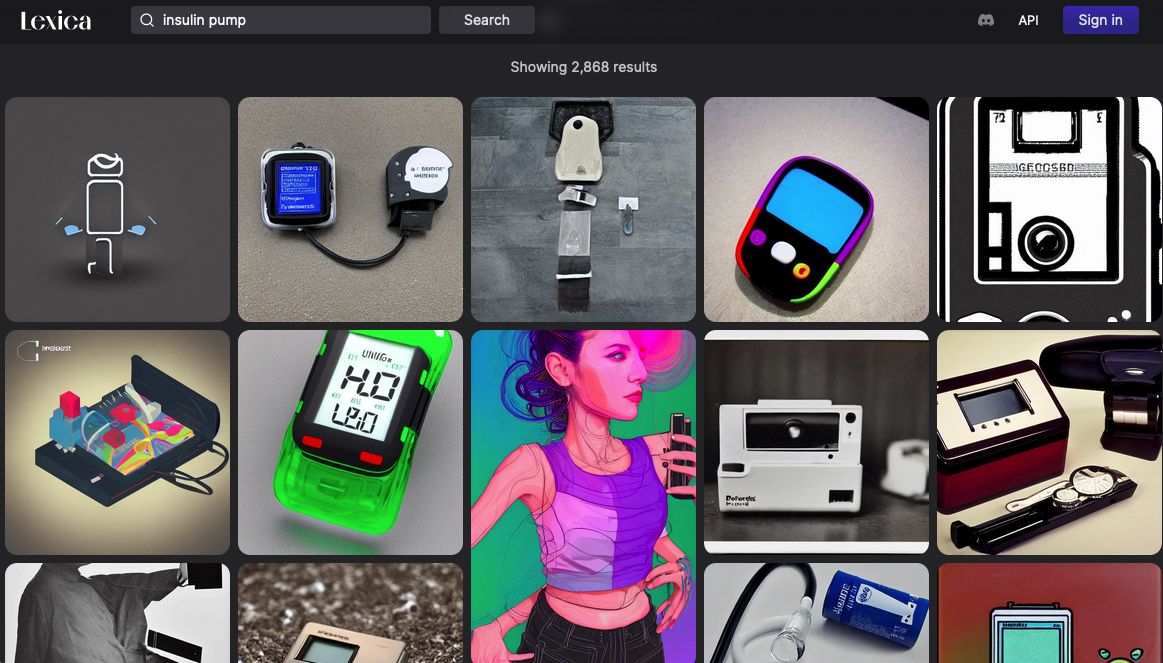

Sadly, “insulin pump” as a keyword is disheartening in a different way.

There are so few existing images of people with insulin pumps that the images generated by AI are a mix of weird hybrids of old-school computer components and blood glucose monitors or other medical devices.

“Hypoglycemia” mostly generates cartoons in foreign languages or made-up languages. I’m guessing they are jokes by people without diabetes about having low blood sugar and using it as an excuse for various things. “Hyperglycemia” brings a mix of the hypoglycemia-style cartoons and the diabetes-style images of carbs and of how the AI thinks people with diabetes all look.

I’ve noticed this with AI-writing tools, too. AI is good at completing your sentence or writing a few sentences based on well-known concepts and topics that already exist today. It’s not yet good at helping you write content about new concepts or building on existing content.

It’s trained on the content of today and the past, which means all of the biases, stereotypes, and stigmatizing content that aren’t good today are also extrapolated into our future with AI.

I don’t have all the answers or solutions (I wish I did), but I want to flag this as a problem. We can’t expect AI to do better when it is trained on what we have and do today, because what we do today (stigmatize, stereotype, and harm people living with chronic diseases) is not ok and not good enough.

We need to change today and train AI with different inputs in order to get different outputs.

That starts with us changing our behavior today. As I wrote a few days ago, please speak up when you see chronic diseases being used as a “joke”, when you see people being stereotyped, or when you see racism occurring.

It’s hard, it’s uncomfortable – both to speak up, and to be corrected.

I’ve been corrected before, on verbal patterns and phrases I learned from society that I didn’t realize were harmful and stigmatizing to other people.

I’m working on learning to say “I’m sorry, you’re right, and let me learn from this” and trying to do better in the future, living up to my statement that I’m going to learn from that moment.

It can absolutely be done. It desperately needs to be done, by all of us.

We can course-correct, whether it’s in a one-on-one conversation, something we see in a small network on social media, or even in a large room at a conference.

I still remember, and greatly appreciate, what happened when I flagged a diabetes joke made on stage at a conference over four years ago. Upon hearing the joke, I noted that half the room laughed, and that it wasn’t ok. So I spoke up on Twitter, because I was live tweeting from the conference. I didn’t think much would come from it. But it did. Amazingly, it did.

John Wilbanks saw my tweet, realized it wasn’t ok, and instead of tweeting support or agreement (which also would have been great), took an amazing, colossally huge and unexpected step. He literally got up from his seat, went to the microphone, and interrupted the panel that had moved on to other topics. He called out the fact that diabetes was used as a joke a few minutes prior and that it wasn’t ok.

He put on a master class for how to speak up and how to use his power to intervene.

It was incredibly powerful because although the “joke” had gone over most people’s heads and they didn’t think it was a big deal, he brought attention to the fact that it had happened, was hurtful and harmful, and created a moment for reflection for the entire room of hundreds of people.

We need more of this.

When someone flags that they are being stereotyped, stigmatized, or discriminated against – we need to speak up. We need to support them.

It matters not just for today (although it matters incredibly much for today, too) but also for the future.

AI (artificial intelligence) learns from what we teach it, much like our children learn from what we teach and show them. I don’t have kids, but I know what I do and how I behave matters to my nieces and nephews and how they see the future.

We need to understand that AI is learning from what we are doing today, and what we do today matters. It should be enough to want to not be racist, discriminatory, stereotyping, and harmful to other people today. But it’s not enough.

The loudest voices are often the ones establishing “normal” for our culture, our children, and the AI systems that may be running much of the world before our children graduate college. We need to speak up to help shape the conversation today, because what we are doing today is teaching our children and our technology, and it is what we’ll get back in the future, tenfold.

And I want the future to look different and be better, for all of us.
